Back in 2012 I wrote about the private framework around Remote View Controllers, hinting that developers should keep it front of mind. With iOS 8 these became extensions, and they are the main interface to the Apple Watch, at least until WWDC 2015.

On episode 57 of the Debug podcast, Guy & co mentioned that the Apple TV is a prime target for extensions, and I agree, but let’s think it over. In my case, I have a kid and no time to watch TV. I use the Apple TV mainly to stream music, just like I would with an AirPort Express (why, oh why, is this not in the AirPort Extreme and Time Capsule? And why, oh why, have the Apple TV and AirPort Express not merged yet? Neither has been updated in forever. Sigh…). The killer feature, and I really did not expect it to be of any interest when I bought it, is that if I turn the TV on while playing music, it also shows the most recent photos I’ve taken with my iPhone, which means up-to-date photos of my kid. That’s really awesome, and makes both my wife and me stop, look and enjoy. Oh, and my boy likes it too. :-) The second big thing is AirPlay, being able to share my screen on the TV. And this, as I see it, is the niche that Apple TV extensions could carve out.

Take Adobe Lightroom. I love that they have brought it to the iPad, as I have had my 160k photos stored and arranged there since around Lightroom 2.0. There are tons of things I would want them to work on in the app, and of course, showing photos on the TV is a part of that. At the moment, my only choice is AirPlay, which means that what I do on the iPad and what my visiting parents see on the TV are the same thing. Presentation tools like Keynote and PowerPoint have given us the option of separate screen output for ages, so this seems clunky today.

But when would the screen extension fire off? With extensions on your phone or Mac it’s easy: just fire when it seems appropriate. With the Apple Watch, if it’s close by, you’re wearing it, and the phone can show stuff there. But with the Apple TV, most of the time when I’m nearby the TV is off, or the Apple TV is not the source being displayed. So there needs to be some mechanism for alerting the app when it would be proper to use the display, or for the app to request the use of it.

That got me thinking: where else would I like content to be displayed? Sure, Google has shown that glasses are a valid target, and HTC has shown the same for phone covers. I can imagine a lot of places, and not all of them are places I think Apple would get into. Would it be worth it to Apple to allow third parties to host remote view controllers (sorry Apple Marketing, extensions)? That would, for instance, open up the possibility that my window manufacturer could allow apps to project something onto my window. Or refrigerator. Or whatever. Would that be a thing for Apple, just like with MFi, and would that give me as a customer value?

Yesterday, WatchKit became available to us developers and, as far as I can tell, open for the entire world to see, which is a first for Apple, at least since the iPhone introduction. I thought it’d be good to share my first impressions.

I remember the second day the first official iPhone SDK had entered beta. It was new. It was fresh. At the same time it seemed well thought out. It was opinionated. It laid a path of where we would go. And it was a very nice framework on top of BSD. I was thrilled!

WatchKit doesn’t quite feel this way. The first thing I noticed is that “the way it’s done” in iOS is not the way it’s done in WatchKit. For instance, there is no Auto Layout. Auto Layout is THE way to do dynamic layout, and Apple has been pushing it hard. Yet it’s nowhere to be found in WatchKit. Its older brother, springs and struts, is also missing in action. Instead we have a Swing-like layout system of stacked elements, stacked either horizontally or vertically. Heights and widths are explicit, yet the watch comes right off the bat in two screen resolutions. So there goes the interface dynamism out the window.

Despite Apple pushing Swift heavily, the first examples they chose to show, the ones in the demo video at http://developer.apple.com/watchkit, are all Objective-C. Sure, they provide demos in both Objective-C and Swift, but I’m surprised they’re not keeping the message focussed.

Controls are not UIViews, and they don’t seem to be layer backed. This means no Core Animation, and the result is that all animations have to be a series of pre-rendered images, rather than smooth animations rendered on the fly. And yes, that means pre-rendered animations in two sizes, for 38mm and 42mm.

Is it running BSD? No idea. This is probably only important to my nostalgia, as I can’t remember having dived directly into the BSD layer in the last year. So it’s probably just my security blanket, but I like it being there and being available to my native app. But WatchKit apps are not native. They are resources pushed to the watch and managed by the phone. All the logic is on the phone; the watch will only show resources already there, plus a tiny bit of content generated on the fly by the phone and pushed over via what I expect is Bluetooth. So it’s not native, it’s more like a puppet theatre. That’s why map components in our apps cannot be dynamic. I’m sure Apple’s apps are native, so we’re really back to the iPhoneOS 1.1 situation where we were only allowed to make web apps. I hope Apple’s apps have the BSD layer available. But I would very much like to have that too. Perhaps with version 2.0?

But yes, we got a lot more than we expected. What I and many with me expected was the ability to make “glances”, but we also got notifications and we got these puppet-theatre apps. That gives us an awful lot of tools to begin with. Truth be told I can probably implement all the ideas I had so far with this. It’s going to need more work than I expected with the resolution-dependent layout, but much can still be done and I expect we’ll see a bunch of awesome apps.

The limitations, though, make me even more curious as to what kind of computer the S1 chip really is.

One thing I found really interesting is the UITableView replacement. UITableView is an old beast that’s been our bread and butter for a very long time. I look forward to giving the replacement a run and seeing what kind of abuse it can withstand. With no scroll views, this one looks to be a target for an awful lot of experimentation in the months to come.

Dear John,

thanks for the great show (ATP), I look forward to every episode the three of you do. In the episode I listened to today (#53) you guys talked about ObjC moving forward, and you mentioned Erlang a few times.

Two small disclaimers first: (1) I’m very new to the world of Erlang, and (2) the company I work for has subsidiaries that focus only on Erlang, and shares in companies whose products are built on top of Erlang. I like to believe I’m not influenced by this, but I am influenced, amongst other things, by my CTO’s passion for the ecosystem.

Erlang is two things: a VM called BEAM, and a language. The language is not to my taste, but I really like the VM. Luckily for me, there is a new language called Elixir that runs on the Erlang VM as a first-class citizen.

What I really like about writing for this ecosystem is that it launches a ton of green threads (which Erlang calls processes) instead of GCD threads, and these processes do true (shared-nothing) message sending between them. The actor model is back!

Elixir has other fun stuff too, such as piping function calls as if they were commands in the terminal. So far, I find myself writing a bunch of pattern matching for the work I want it to do, in a more terse yet easy-to-read way than I’m used to coming from ObjC and the usual suspects of languages before that.

I think you’d find it interesting to dive into the Elixir and Erlang VM combination. The take-aways I’d love to bring back to ObjC would be to (1) be even stricter about making everything immutable, (2) introduce green threads where, for instance, singletons can live, and (3) make an objc_msgSend that sends messages between threads containing not pointers to data but an actual message, and that lets the sending process continue with its logic until it needs the answer, at which point it can block and wait if there is no reply yet.
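To make point (3) a bit more concrete, here is a minimal sketch of that message-passing style. It is written in Go rather than ObjC or Elixir, purely because goroutines and channels make a convenient stand-in for green threads and mailboxes; the names `request`, `startCounter` and `addAsync` are made up for this illustration and are not part of any existing API.

```go
package main

import "fmt"

// request is a self-contained message: it carries a copy of the data
// (not a pointer into the sender's memory) plus a channel for the reply.
type request struct {
	amount int
	reply  chan int
}

// startCounter launches a green thread (goroutine) that owns its state
// exclusively and only reacts to messages arriving in its mailbox.
func startCounter() chan<- request {
	inbox := make(chan request, 16) // small mailbox so senders rarely wait
	go func() {
		total := 0 // private to this goroutine, never shared
		for req := range inbox {
			total += req.amount
			req.reply <- total
		}
	}()
	return inbox
}

// addAsync posts a message and hands back a channel that will eventually
// hold the answer, so the caller can carry on with other work.
func addAsync(counter chan<- request, amount int) <-chan int {
	reply := make(chan int, 1) // buffered: the actor never waits on the caller
	counter <- request{amount: amount, reply: reply}
	return reply
}

func main() {
	counter := startCounter()
	first := addAsync(counter, 2)
	second := addAsync(counter, 3)

	fmt.Println("sender keeps working") // logic continues before any reply is read

	// Only now does the sender block, and only if the reply is not there yet.
	fmt.Println(<-first)  // prints 2
	fmt.Println(<-second) // prints 5
}
```

The counter plays the role a singleton would in ObjC: one owner of the state, reachable only through messages, with no locks and no shared pointers.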

This was a bit longer than a 140-character tweet, but there you go. Oh, and to tie this together with the news, I only noticed WhatsApp because they sponsor conferences and give talks on how they built their backend in Erlang.

Over the past month or so I’ve reviewed a lot of code, and one issue keeps cropping up: too much use of @property.

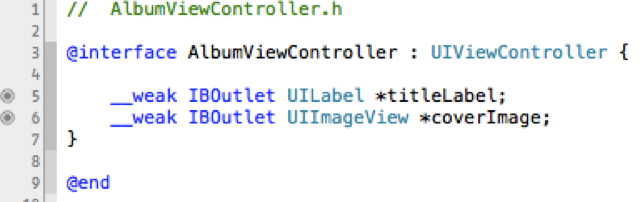

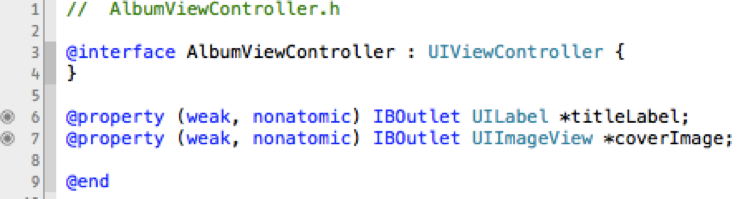

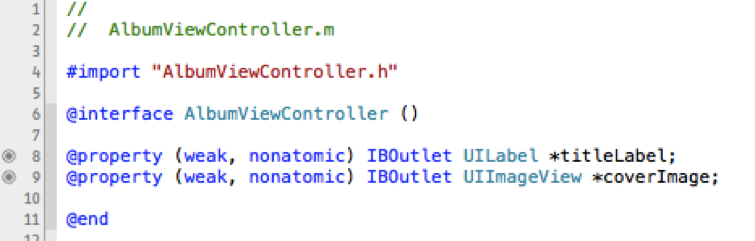

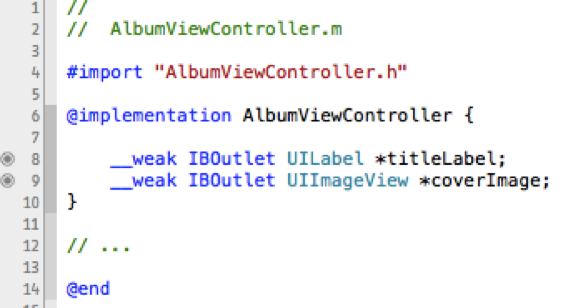

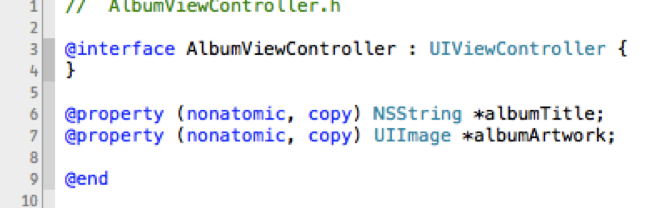

Suppose we have the class AlbumViewController, which extends UIViewController and is used to display information about a music album. In the view, we want to display a title and some artwork. So we make our storyboard, and drop a UILabel and a UIImageView into a view controller of the class AlbumViewController. Now, how do we hook them up? We really have four alternatives:

Objective-C, in Apple’s incarnation, doesn’t have any sense of private, protected and public variables and methods: everything is public.

Instance variables are accessible only to the class, and its subclasses.

Properties are accessible from any object that has a reference to the object that exposes them. This is the main reason for having properties. The second reason is that you may want getting or setting a property to have some side effects.

So, in the example above, if we choose properties, hidden or explicitly public, we invite other objects to manipulate these properties directly. Thus we need to inspect our interface: does it make sense to expose the UILabel and UIImageView directly? Can the class AlbumViewController cope with other objects manipulating these views? Probably not.

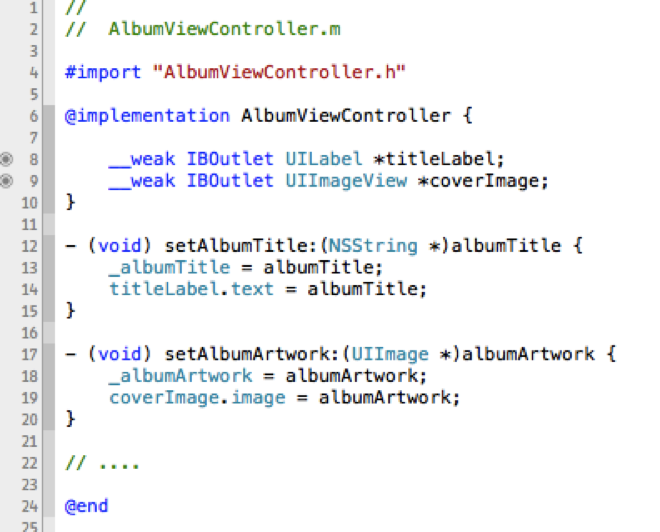

Properties that would make sense, from a class interface perspective, would be setting the album title and artwork. Then you can keep the presentation of these as an implementation detail:

This way you have nice encapsulation, and thus make your class easier to use for the next person coming into your project, or for yourself next week.
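The same idea can be sketched outside ObjC. The following is an analogy in Go, not the original ObjC code: `label` and `imageView` are hypothetical stand-ins for UILabel and UIImageView, and the method names are invented for the illustration. Go hides lowercase (unexported) fields from other packages, which roughly plays the role of keeping the views as an implementation detail.

```go
package main

import "fmt"

// label and imageView are hypothetical stand-ins for UILabel and UIImageView.
type label struct{ text string }
type imageView struct{ imageName string }

// AlbumViewController exposes only what callers are meant to set.
// The views themselves are unexported fields, so code in other packages
// cannot reach in and manipulate them directly.
type AlbumViewController struct {
	titleLabel  label
	artworkView imageView
}

// SetAlbumTitle is part of the public interface; how the title ends up
// on screen stays an implementation detail.
func (c *AlbumViewController) SetAlbumTitle(title string) {
	c.titleLabel.text = title
}

// SetArtwork is the second piece of the public interface.
func (c *AlbumViewController) SetArtwork(imageName string) {
	c.artworkView.imageName = imageName
}

// Describe reports what is currently displayed, without handing out the views.
func (c *AlbumViewController) Describe() string {
	return fmt.Sprintf("%s [%s]", c.titleLabel.text, c.artworkView.imageName)
}

func main() {
	var controller AlbumViewController
	controller.SetAlbumTitle("Kind of Blue")
	controller.SetArtwork("cover.png")
	fmt.Println(controller.Describe()) // prints "Kind of Blue [cover.png]"
}
```

Callers see an album title and artwork; whether these are backed by a label, an image view or something else entirely can change without touching any calling code.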

My issue with alternatives #2 and #3 is that they are really the same: they expose the properties to the world, even though alternative #3 tries to obscure it a bit. If you write any kind of dynamic code using just the tiniest bit of class introspection, the methods will offer their services straight away. So unless your interface is meant to expose these properties, don’t use either. The only extra things you get out of them are having to ask people not to use your properties, and a slightly higher cost of accessing your instance variables.

Alternative #1 is good if you want the instance variables to be available to subclasses, and want to make it clear that subclasses should take them into consideration. Again, there’s not all that much difference between this and alternative #4, but #1 is more explicit and readable, so it’s a great choice if you expect the variables to be useful to subclasses.

Alternative #4 is my preference for everything that is just something I need to get my implementation of this class done. And I really think it should be yours as well, and is what should be taught in basic iOS training. Unfortunately, surprisingly many go for alternative #3, cluttering the interface with lots and lots of “hidden” properties, that aren’t hidden at all, especially not at runtime.

So to sum up: think about your interface and what you want to expose to the world. Expose only this, keep everything else as an implementation detail, unless you expect the class to be subclassed. Then you can expose some of the instance variables in your interface as well.

As a final PS, we’re not doing the compiler any favour by going into these details; it will get its work done anyhow. But this is so that we can keep a clear interface when communicating with other developers coming into the project or using the project, and with our future selves working on the project.

Now that I’ve posted my view, I would love to hear your opinions, especially because I would love to hear some good arguments in favour of alternative #3.

Finally, after having sent mails to people high up at Apple, signing petitions, joining groups and blogging about it for many years, iTunes movie rental and purchase is coming to Scandinavia, possibly the world. That is great, we have been missing it for ages. Thank you, Apple, for allowing us to send you money.

Like many other people, I’m looking forward to trying out this service, especially the rental service.

But what makes me really happy about this is that I am now actually optimistic about iTunes Match not being a US-only product. So far, we have had no access to iTunes movies, TV shows, Apple’s iBookstore and probably other similar features I forget. It even took ages for the Apple TV to get to Denmark; I bought mine in Australia. But iTunes Match is exciting, and will hopefully be a really great showcase for iCloud. October 4th will be exciting in its own right, but the movies becoming available makes me hopeful that there will be fewer US-only services and more open-for-the-world services.
