Dear iOS dev,

If you haven’t already read Ole Begemann’s article on Remote View Controllers (and part 2 and part 3), you should do so now. I think, and hope, this will be one of those bread-and-butter features of iOS 7, so now would be a great time to think about where XPC and remote views fit into your existing and upcoming apps.

Last week I made sure I had good backups of my iMac. I usually do, but I wanted to make doubly sure, because this weekend I was going to nuke my disks to make a fusion drive on my 2011 iMac.

When the iMac was released in 2011 with the Z68 chipset, an SSD and a magnetic disk, I was excited, as I was sure Apple would exploit the caching feature of the chipset and use 64GB of the SSD as a cache. Alas, they didn’t. So I had to move files to “strategic places” to get my computer to run as fast as it could, relying more on the SSD than on the spinning disk.

The announcement of the fusion drive made me very happy, as it seemed that Apple would one-up the Z68. The fusion drive would keep frequently used data on the SSD and present both drives as one combined drive, so no more moving files around manually and symlinking between them. Yay! But, alas, this would only be for the 2012 iMac and the 2012 Mac mini. Luckily, before long, Patrick Stein had shown us all how to use Core Storage to make our own fusion drive.

Creating the fusion drive was easy: boot from a USB stick with Mountain Lion 10.8.2, switch to Terminal and run:

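    # Create a Core Storage logical volume group named Fusion from both disks
    diskutil cs create Fusion disk0 disk1
    # List Core Storage objects to find the UUID of the new logical volume group
    diskutil cs list
    # Create a journaled HFS+ volume called Drive, 2288g in size, in that group
    diskutil cs createVolume <UUID> jhfs+ Drive 2288g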

The hard part was working out how much disk space to give the volume, but luckily it was just as easy to delete the fusion drive, so after a few iterations I had a setup as close to 100% as I thought was sensible (26.5MB left).

After completing that, I wanted to restore from Time Machine, but alas, that did not work. So I did a fresh install of Mac OS X instead, and then restored from Time Machine. Much better: that worked without a hitch. The only thing to watch out for was that while I had backed up two drives, I now had only one, so it restored one drive and I had to move over the data from the second one myself. No problem, but worth noting.

At this point, I had my 2011 iMac, a 3.4GHz i7 with 16GB RAM, booting from a fusion drive built from the 256GB SSD and the 2TB drive it shipped with. This is an awesome setup! The noise from my 2TB drive has always been quite noticeable, but it has been nearly silent this weekend. I’ve kept “iostat -w 1 disk0 disk1” running the whole time, and the 2TB drive gets roughly 1/80th as much disk access as the SSD! That is amazing, and a whole lot better than what I achieved with my manual setup!

I have also had two kernel panics during the weekend. I cannot confirm that they were caused by the fusion drive, but in the interest of full disclosure, I think I should bring it up. Patrick was very clear that he would not recommend using this on a production system. I use it now on my home system, and I probably will for a while, but I have great backups, other machines to work on if this one fails, and a habit of living on the cutting edge.

My conclusion is that you should read Patrick’s writings and make up your own mind about whether this is something you want to do. For my part, I now have an iMac that works exactly the way I want it to, especially if I don’t get any more kernel panics. Of course, Apple doesn’t support this one bit, so if they release an update next month that kills off support for older Macs, I’m out of luck. Don’t use this if you don’t have great backups, and don’t use this if you can’t afford to have your computer offline while you reinstall it and restore from backup. I have great backups and a fall-back, so I’ll be using this setup and will report back on how it goes.

A last resource worth mentioning is Andres Petalli’s write-up; there are a couple of good comments there as well.

Over the past month or so I’ve reviewed a lot of code, and one issue keeps cropping up: too much use of @property.

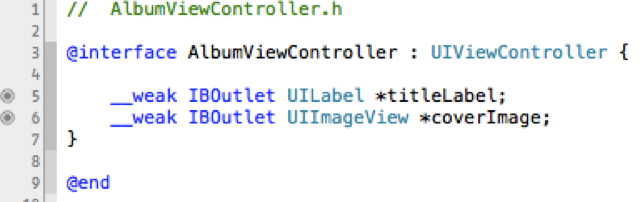

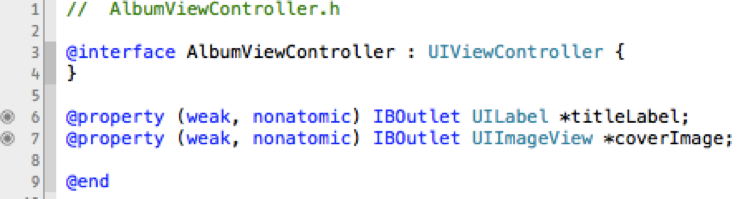

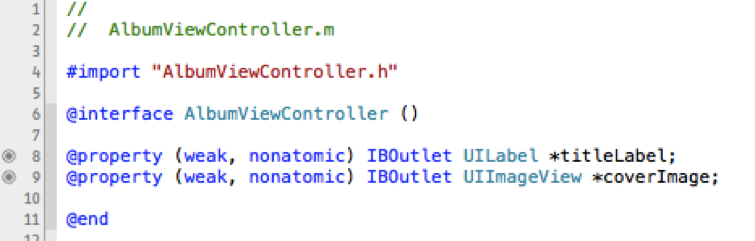

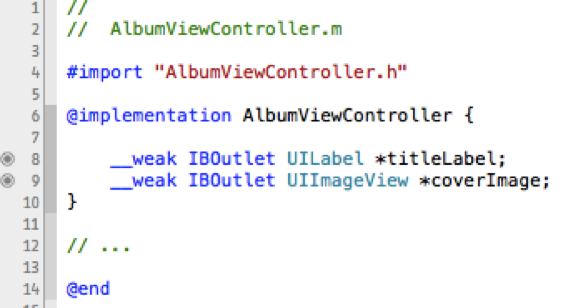

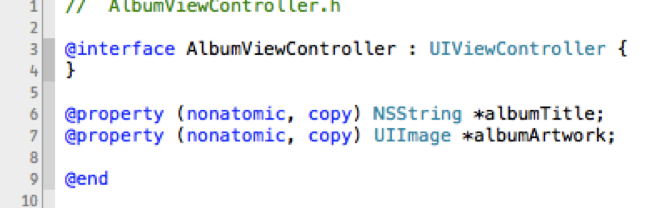

Suppose we have a class AlbumViewController that extends UIViewController and is used to display information about a music album. In the view, we want to display a title and some artwork. So we make our storyboard and drop a UILabel and a UIImageView into a view controller of class AlbumViewController. Now, how do we hook them up? We really have four alternatives:
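The four alternatives are not spelled out in code here. Judging from how they are discussed below, they are presumably along these lines. This is a reconstruction, not the author's original code: the outlet names titleLabel and artworkView are made up for illustration, the snippets are four separate variants of the same class rather than one file, and they assume ARC and the iOS SDK (UIKit), so they only build inside an iOS project.

```objc
// Alternative #1: instance variables in the public @interface (AlbumViewController.h)
@interface AlbumViewController : UIViewController {
    IBOutlet UILabel *titleLabel;
    IBOutlet UIImageView *artworkView;
}
@end

// Alternative #2: public properties in the header (AlbumViewController.h)
@interface AlbumViewController : UIViewController
@property (nonatomic, weak) IBOutlet UILabel *titleLabel;
@property (nonatomic, weak) IBOutlet UIImageView *artworkView;
@end

// Alternative #3: "hidden" properties in a class extension (AlbumViewController.m)
@interface AlbumViewController ()
@property (nonatomic, weak) IBOutlet UILabel *titleLabel;
@property (nonatomic, weak) IBOutlet UIImageView *artworkView;
@end

// Alternative #4: instance variables in the @implementation block (AlbumViewController.m)
@implementation AlbumViewController {
    IBOutlet UILabel *titleLabel;
    IBOutlet UIImageView *artworkView;
}
@end
```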

Objective-C, in Apple’s incarnation, has no notion of private, protected and public methods: every method, including the accessors a property generates, can be called by anyone who knows its name.

Instance variables, on the other hand, are by default accessible only to the class and its subclasses.

Properties are accessible from any object that has a reference to the object exposing them. This is the main reason for having properties. The second reason is when you want getting or setting a value to have side effects.

So, in the example above, if we choose properties, hidden or explicitly public, we invite other objects to manipulate them directly. Thus we need to inspect our interface: does it make sense to expose the UILabel and UIImageView directly? Can AlbumViewController cope with other objects manipulating these views? Probably not.

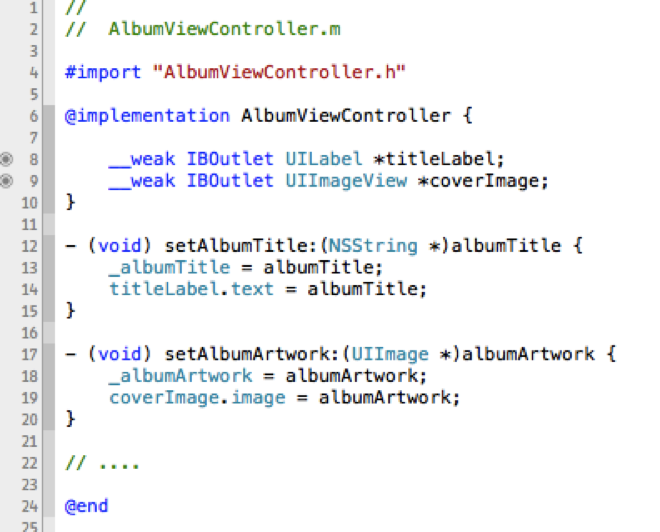

Properties that would make sense from a class interface perspective are the album title and the artwork. Then you can keep the presentation of these as an implementation detail.
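A minimal sketch of that idea, assuming ARC, property auto-synthesis (Xcode 4.4 or later) and a storyboard that connects the two outlets. The names albumTitle, artwork, titleLabel and artworkView are illustrative, not taken from the original post. The header exposes only what the album is; the views stay private instance variables in the @implementation block (alternative #4). It needs UIKit, so it only builds in an iOS project.

```objc
// AlbumViewController.h
#import <UIKit/UIKit.h>

@interface AlbumViewController : UIViewController
@property (nonatomic, copy) NSString *albumTitle;
@property (nonatomic, strong) UIImage *artwork;
@end

// AlbumViewController.m
#import "AlbumViewController.h"

@implementation AlbumViewController {
    // Private outlets, connected in the storyboard; not part of the interface.
    IBOutlet UILabel *titleLabel;
    IBOutlet UIImageView *artworkView;
}

// Only the setters are custom, so the getters and the _albumTitle/_artwork
// ivars are still auto-synthesized.
- (void)setAlbumTitle:(NSString *)albumTitle {
    _albumTitle = [albumTitle copy];
    titleLabel.text = _albumTitle; // no-op while the view isn't loaded (outlet is nil)
}

- (void)setArtwork:(UIImage *)artwork {
    _artwork = artwork;
    artworkView.image = artwork;
}

- (void)viewDidLoad {
    [super viewDidLoad];
    // Outlets are connected now: show values that were set before the view loaded.
    titleLabel.text = self.albumTitle;
    artworkView.image = self.artwork;
}
@end
```

Other objects can now say what to show (`controller.albumTitle = ...`) without knowing, or being able to depend on, whether it ends up in a UILabel.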

This way you get nice encapsulation, which makes your class easier to use for the next person coming into your project, or for yourself next week.

My issue with alternatives #2 and #3 is that they are really the same: they expose the properties to the world, even though alternative #3 tries to hide them a bit. Any dynamic code that uses even the tiniest bit of introspection, such as respondsToSelector: or key-value coding, will find the accessor methods straight away. So unless exposing these properties is part of your interface, don’t use them. All you gain is having to ask people not to use your properties, plus a slight extra cost every time you access the underlying instance variables.
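To see the introspection point in practice, here is a small sketch that needs only Foundation, so it builds outside an iOS project. The class Album, its internalNote property and its _scratch instance variable are hypothetical names made up for this illustration, and ARC is assumed. It declares one property in the "public header" (like alternative #2), one in a class extension (like alternative #3) and one instance variable in the @implementation block (like alternative #4), then probes them from outside the class.

```objc
#import <Foundation/Foundation.h>

// Hypothetical class for illustration only; assumes ARC.
// "Public header": what other classes are meant to see.
@interface Album : NSObject
@property (nonatomic, copy) NSString *title;          // like alternative #2
@end

// Class extension, normally placed in Album.m.
@interface Album ()
@property (nonatomic, copy) NSString *internalNote;   // like alternative #3
@end

@implementation Album {
    NSString *_scratch;                               // like alternative #4
}
@end

// YES if Album instances answer to the named selector at runtime,
// regardless of which header declared it.
BOOL AlbumInstancesRespondTo(NSString *selectorName) {
    return [Album instancesRespondToSelector:NSSelectorFromString(selectorName)];
}

// Writes and reads the class-extension property from outside the class,
// using nothing but a string key (key-value coding).
BOOL AlbumHiddenPropertyIsWritable(void) {
    @autoreleasepool {
        Album *album = [[Album alloc] init];
        [album setValue:@"set from outside" forKey:@"internalNote"];
        return [[album valueForKey:@"internalNote"] isEqualToString:@"set from outside"];
    }
}
```

Here instancesRespondToSelector: finds title, internalNote and setInternalNote: alike, and the "hidden" property can be written and read by any caller that knows its name. The instance variable declared in @implementation has no accessor methods for this kind of check to find.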

Alternative #1 is good if you want the instance variables to be available to subclasses and want to make it clear that subclasses should take them into account. Again, there isn’t much difference between this and alternative #4, but #1 is more explicit and readable, so it’s the right place for instance variables you expect subclasses to use.

Alternative #4 is my preference for everything that I just need to get the implementation of this class done. I really think it should be yours as well, and it is what basic iOS training should teach. Unfortunately, surprisingly many developers go for alternative #3, cluttering the interface with lots and lots of “hidden” properties that aren’t hidden at all, especially not at runtime.

So to sum up: think about your interface and what you want to expose to the world. Expose only that, and keep everything else as an implementation detail, unless you expect the class to be subclassed. In that case you can expose some of the instance variables in your interface as well.

As a final PS: we’re not doing the compiler any favours by going into these details; it will get its work done anyhow. We do it to keep a clear interface for the other developers who join or use the project, and for your future self working on it.

Now that I’ve posted my view, I would love to hear your opinions, especially any good arguments in favour of alternative #3.

For a project at work, which I hope will eventually be open source, I needed protobuf compiled for iOS. A colleague of mine showed me how it had been compiled for iOS 4 using these scripts, but with iOS 5 I ended up with binaries compiled for the arm architecture instead of the armv7 architecture.

Be aware that the iOS 5 SDK actually ships with a version of protobuf, but it’s a bit old (version 2003001), and it only ships the binary, not the headers.

To compile protobuf, grab the latest source (2.4.1 at the time of writing) and run the following script (download script):

After running this, you should have a directory called /tmp/protobuf/arm containing protobuf compiled for the armv7 architecture, ready for your iPhone or iPad. Copy that into your project and start using protobuf!

Tomorrow I begin work at Trifork! There I’ll be doing iOS development, so before I start, I thought I’d share a bit about how I do my development now.

First of all, I use GitHub or Beanstalk for source control, depending on which client the work is for (for my own projects, I use GitHub). Mercurial is nice, but git and svn just work with Xcode, so I stick to those.

Since I have source control, I can have continuous delivery. For that I use Jenkins. Jenkins is not good enough. It’s not great. It’s not beautiful. It’s not intelligent, easy, friendly, intuitive, or all those other nice words. But it works! I use the Clang Scan-Build, GitHub OAuth, GitHub, Pre SCM BuildStep, Redmine, SICCI, SSH Slaves and Xcode integration plugins, even though I could get most things done by just adding a shell script. That gives me a build per commit, which is nice and reliable and brings the pain forward. Yay, pain!

Of course, having this infrastructure in place begs for tests. Now, I think tests for iPhone applications suck. Big time! The reason is that I hate deployment cycles. They take time, and I’d rather spend that time writing code, thinking about the application, and solving real problems for my customer, preferably before he knows about them. Failing that, I’d rather drink coffee, do chores around the house, or clean my pipes than wait for build cycles. It’s just an enormous waste of time. And tests for iOS drain time, as there’s no such thing as a unit test for iOS. Everything is an integration test or a user acceptance test: you always fire up the entire application before running any test.

Now that I have that rant out of the way: it’s great that I can leave my tests to Jenkins. It runs them, and the output gets converted into a JUnit-style test report so that Jenkins’ tooling can pick it up and present it nicely. Yay!

Then we get to deployment. My clients communicate with me. A lot! This should be different like so, I changed my mind about this, I’ve found a bug if you do this and that. It’s great! I love my users for this! It creates such momentum! So how awful would it be if I said “I’ll collect everything and give you a beta in three weeks”? Continuous delivery isn’t just delivery to me, it is to the users as well. For this I use HockeyApp. They’re a great bunch and really responsive, and while they don’t support iOS 5 well enough yet, there is so much good there. My app gets auto-deployed there, my client sees the new release, hits install and boom! Now he’s running the latest build! Crash reports get sorted by build number, and the guys at HockeyApp have told me they’re working on making the crash reports even more awesome! Yay!

So how do I follow up on all these things? I have to admit I’m a cheapskate, so I use Redmine. I would use Basecamp, and I hope to some day; it’s so awesome, but so far it hasn’t been worth the extra cost. The day it is, I’ll run and buy it. My problem with both Basecamp and Redmine? I just haven’t seen how I’d integrate them with my scrum sprints. Yet. I’m sure both can do it, and I hope to learn from people who are wiser than me in this regard.

Finally, after a deployment to the App Store, I use Flurry to keep track of where my users are at, both in terms of app version (why don’t they upgrade? This new version is awesome! I need to tell them more about it!) and OS (really? They’re still on iOS 4?? iOS 5 has been out for a month now! Oh well, not everyone is like me). I’ve also rolled my own crash reporting so that, should I have failed horribly, users can get in touch with me or the client with a detailed log of what went wrong.

So that’s my work setup so far, and I’m quite happy with it. It still needs better scrum integration, and there are still too many pieces that don’t talk sensibly to each other. But it’s getting more tightly knit, and I’m looking forward to seeing how Trifork does it, how I can improve based on what they have to teach, and how I can improve the way they’re doing it. It’s going to be great! Those guys are brilliant, and I love working with brilliant people.

Finally, if you’re in the Esbjerg area and working with iOS, get in touch with me. If you’d love to start working with iOS, get in touch with me! There’s an NSCoder Night coming up soon, biweekly I hope.
